This is a great question. I’m sure a lot of folks watch the evening news, check their favorite weather app or even read an update here on the blog and think, ‘Wait, didn’t it say something different yesterday?’

It probably did.

There is a lot that goes into making a weather forecast. And really, there are three big things that cause a weather forecast to change.

- Initial conditions change

- Model biases skew data

- Humans are all different and all indecisive

All of them weigh equally when building a forecast. And together they are the reason why a TV station may have one forecast, an app shows another and this blog has something completely different.

Initial Conditions

Just what the heck are those, right? Initial conditions are the way the atmosphere looks right now. At this very second. Sort of.

Satellites are actively monitoring the atmosphere all day and all night. Polar-orbiting satellites are taking measurements of the atmosphere at every point around the globe and that data is helping to make model guidance more accurate.

While they really do help, they can only do so much. So, the National Weather Service launches weather balloons to get an idea about what the atmosphere is like over a particular spot. But we don’t release these hourly, or even every three hours.

These are released twice per day.

And they aren’t released everywhere. We have about 75 places across North America that release weather balloons on any given day at any given time.

You may be thinking, ‘that doesn’t seem like enough’ and you would be 100-percent correct. It isn’t. In order to accurately understand what the atmosphere looks like, we would need to have many more launch sites.

Imagine, if you will, you were brought into a room of Kindergarteners. One kid from each state. And you asked them “What is your favorite food?” And wrote down all the answers.

Then you went into a room with a Big Wig from a large corporation that makes food for kids. And they ask: What kind of food does every kindergartner in America like?

That is the problem we face with obtaining initial conditions from these weather balloons.

Based on the map above, we don’t know what the atmosphere is doing in Houston. Nor Hattiesburg. Nor Mobile. Nor in Ames, Iowa, Santa Fe, New Mexico, nor in Syracuse, New York.

That said, we can grab data from other areas with a third source: instruments on the front of airplanes!

But that still leaves out a lot of data. What about places with no major airport? Places like Glendive, Montana and Burlington, Kansas are going to feel pretty left out. That is why the polar-orbiting satellites are so important. Those satellites fill in the gaps – not only across America, but across the globe. That helps when we start plugging numbers into the computer models.

But there is still a need for models to estimate numbers and data where there is no actual data available.

That is why I am always saying, if you want better forecasts, we need to fund the NWS more. More weather balloons in more places means more accurate data being fed into the models.

Model Biases? How is a computer biased?

Computers can be fickle. Anyone who has ever used any sort of technology has experience with a misbehaving computational device.

But when it comes to computer weather models, they are pretty reliable.

I once heard a mathematician say – I’m going to paraphrase a bit – “Our equations, that describe the physics of motions of molecules and the atmosphere itself, are so good that if we could take a snapshot of every molecule in the atmosphere across the entire globe, we could predict the weather forever. We would never need another weather forecast.”

I’m not smart enough to double-check the math on that. But I have to trust the analysis there.

Because our equations are pretty good. Here is just one quick equation to figure out if there is any extra spin in the atmosphere.

And equations like this are really, really reliable in predicting what we actually see.
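For the curious, here is a minimal sketch of what measuring 'spin' can look like in code. It assumes the spin in question is relative vorticity (how the north-south wind changes from west to east, minus how the east-west wind changes from south to north), which may not be the exact equation pictured in this post. The function name, the two wind fields and the 0.5 rotation rate are all made up for illustration.

```python
def relative_vorticity(u, v, x, y, h=1.0):
    """Estimate spin at (x, y) with centered finite differences.

    u(x, y) is the west-to-east wind, v(x, y) the south-to-north wind.
    Relative vorticity is dv/dx - du/dy.
    """
    dv_dx = (v(x + h, y) - v(x - h, y)) / (2 * h)
    du_dy = (u(x, y + h) - u(x, y - h)) / (2 * h)
    return dv_dx - du_dy


# Hypothetical wind field: air rotating counterclockwise like a record,
# with a made-up rotation rate of 0.5.
def spinning_u(x, y):
    return -0.5 * y


def spinning_v(x, y):
    return 0.5 * x


# Hypothetical wind field: the same steady wind everywhere, so no spin.
def steady_u(x, y):
    return 10.0


def steady_v(x, y):
    return 0.0


spin = relative_vorticity(spinning_u, spinning_v, x=3.0, y=2.0)
no_spin = relative_vorticity(steady_u, steady_v, x=3.0, y=2.0)
print(spin, no_spin)  # 1.0 0.0
```

The rotating field comes out at 1.0 (twice the rotation rate) and the steady wind comes out at 0.0. The math only works that cleanly because the winds here are known exactly at every point, which is precisely what we don't have in the real atmosphere.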

So then, how do we ever get the forecast wrong? Most of it goes back to initial conditions. If we have to estimate those, then it doesn’t matter how good the equations are. Garbage in, garbage out. Even with high-level calculus.

The other problem is model biases.

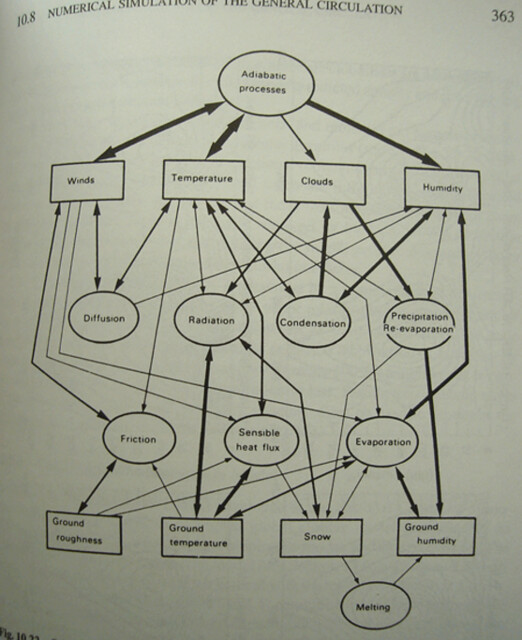

The above equation is just one step in a sea of interconnected parameters that determine, “will it rain today?”

And when humans are designing these models, certain models have knobs tweaked one way and others tweak those same knobs a different way. Sometimes this is on purpose, other times it is just a consequence of the math that goes into the model.

Other times, it might be something really, really simple.

Let’s be a computer model and make a simple forecast. We live on the star and want to know the temperature for today. Let’s say the top left, top right, bottom left, and bottom right numbers are all from weather balloon data. The rest are estimated.

It might be pretty easy to just extrapolate the data. In the above case, you could estimate the temperature would be 56 degrees. Maybe even 57 degrees.

But now we are making an estimate from an estimate. You can see how much ‘uh oh’ we are about to step in, can’t you?

When trying to produce a forecast, we humans rely on the computer models (the same ones I mentioned above) to come up with all of the numbers – even when there isn’t real, actual data to input. In order to do this, computer models split the ‘continuum of space’ into a finite number of small volumes called “grid cells,” within which they forecast average conditions, and then they approximate the smooth progression of those parameters over time.

And when a forecast point falls in-between two grid cells, the number is estimated by the computer.

In the above image where there are four grid points within the highlighted area, the computer has to estimate the initial conditions in-between. And then it has to use that estimate in the equation I showed a while back to get a number that explains if there is any spin in the atmosphere one hour from now.
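To make that 'estimate in-between' step concrete, here is a minimal sketch of one common way to do it, called bilinear interpolation. I am not claiming any particular weather model fills gaps exactly this way, and the four corner temperatures are numbers I made up, not the ones from the image.

```python
def bilinear(x, y, bottom_left, bottom_right, top_left, top_right):
    """Estimate a value at (x, y) inside one grid cell.

    x and y run from 0 to 1: (0, 0) is the bottom-left corner and
    (1, 1) is the top-right corner.
    """
    # Slide along the bottom and top edges first...
    bottom = bottom_left + (bottom_right - bottom_left) * x
    top = top_left + (top_right - top_left) * x
    # ...then slide between those two edge estimates.
    return bottom + (top - bottom) * y


# Hypothetical corner temperatures in degrees (illustration only).
corners = dict(bottom_left=52.0, bottom_right=58.0,
               top_left=54.0, top_right=60.0)

center = bilinear(0.5, 0.5, **corners)
print(center)  # 56.0
```

Notice the estimate is only a blend of the four corners. If there is a cold pocket or a thunderstorm sitting in the middle of the cell, this method has no way of knowing about it.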

If the estimation is off, even by a tiny, tiny amount, you might think, ‘that’s okay, it’s close enough,’ right?

Except the computer then uses that barely inaccurate number, based on an inaccurate estimation, to make its next forecast, for one hour later, after the first one-hour forecast. And that number is now off by a bit more.

And it does this, in some cases, 384 times.
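Here is a minimal sketch of that snowball effect. It is not a weather model. It uses the logistic map, a classic toy equation that behaves chaotically, as a stand-in, and the starting value, the size of the error and the function names are all assumptions picked for illustration.

```python
def step(x, r=3.9):
    """One 'forecast hour' of the logistic map, a toy chaotic system."""
    return r * x * (1.0 - x)


def gap_between_runs(start, error, steps=384):
    """Run the toy model twice, once from a slightly wrong start,
    and record how far apart the two runs are after every step."""
    truth, guess = start, start + error
    gaps = []
    for _ in range(steps):
        truth, guess = step(truth), step(guess)
        gaps.append(abs(truth - guess))
    return gaps


# Start the second run off by one part in a billion.
gaps = gap_between_runs(start=0.4, error=1e-9)

for hour in (1, 24, 48, 96, 384):
    print(f"step {hour:3d}: runs differ by {gaps[hour - 1]:.2e}")
```

After the first step the two runs are still nearly identical. Given enough steps, the gap grows until the two runs have nothing to do with each other, even though the equation itself never changed.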

You can imagine how inaccurate it is at 384 hours out. Or, I can show you! Here is how much the forecast for Friday, January 28th at 1am has changed over roughly the last 48 hours.

All of this happens because a model is biased to estimate certain things in a certain way. And when it estimates something slightly differently early (due to initial conditions changing, or other reasons), it can have drastically different outcomes down the line.

This is why certain types of weather forecasts are, in general, not any good looking out very far into the future.

DIFFERENT WEATHER FORECASTS: HOW FAR OUT ARE THEY TRUSTWORTHY

— General Temperatures: 10-20 days

— General Cloud Cover: 7-12 days

— Severe Storm Potential: 4-10 days

— Rainfall Totals: 5-8 days

— Specific Temperatures: 4-7 days

— Hurricane location: 4-6 days

— Snowfall Potential/Totals: 2-3 days (depending on latitude, farther south, lower number of days)

— Hurricane Intensity: 1-2 days

— Severe Storms specific location: 12-48 hours

— Severe Storm Intensity: 6-36 hours

This is not a complete list, and some of this is probably a bit subjective, but in general, given model biases, these are current limits based on the Initial Conditions (or lack thereof) being input into our models.

The subjective part leads me to the last part….

Humans are different and indecisive

Meteorologists are great. I am biased, but I think they’re a cool bunch of people. Well, most of them at least.

But man, most days we struggle to make a decision. For good reason! Or two good reasons, I guess…. The two things discussed above.

Good meteorologists recognize that the initial conditions can adversely affect a forecast. Good meteorologists also recognize model biases can adversely affect a forecast. Great meteorologists take the time to determine how much those two things are impacting the final data being output by the model.

But knowing when to weigh one thing more than the other, or neither at all, is difficult.

Some days a meteorologist may lean more with one model than another. Leading to a forecast of, say, 85 and sunny for the weekend. But two days later, a different model may be performing better. And that model says 75 with storms.

Trying to determine which model guidance is handling a weather pattern better than another is a tough thing to manage. Plus trying to monitor all of the different influences that go into a forecast on top of that? Oftentimes there are more than 20 different pieces to the puzzle. Being able to identify and parse out the important from the unimportant, the good from the bad, is what differentiates a good meteorologist from a great meteorologist.

That takes a lot of time, effort, resources and experience. And thus, there are not that many great meteorologists out there.

This is another reason why if you watch TV at night and channel surf between news channels, The Weather Channel, AccuWeather and WeatherNation, you may see a different forecast on each channel.

Oftentimes I’ll hear from folks asking why I’m calling for only a few strong storms and someone else is calling for a severe weather outbreak. Or, more recently, why I’m saying a potential for snow flurries/wintry mix when everyone else is saying just rain.

Because we are all different.

And that’s not to say I’m right and others are wrong. I’ve been wrong plenty of times. And I will be again. But time, effort, resources, and experience all give each meteorologist a different lens to build a forecast.

The Bottom Line

Forecasting the weather, predicting the future, is difficult. While there are computers and a lot of math to help us, because we are limited in our initial conditions, limited with model biases, and limited by our indecision, our forecasts will never be perfect.

You might be asking, “Isn’t there a better way?”

There are ways to beat the system. Using analogs and ensembles helps. I do this a lot. Analogs are nice because if ‘something’ happened one way in the past, given an atmosphere that looked a particular way, and the atmosphere looks like that again, there is a pretty good chance that the ‘something’ may happen the same way again. Ensembles take a model and change the initial conditions slightly a bunch of different ways and run the same model between 20 and 30 different times to see if there is an ‘average’ pattern that can be discerned.
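Here is a minimal sketch of the ensemble idea. The 'model' is a toy chaotic equation (the logistic map) standing in for a real weather model, and the 25 members, the size of the nudges and the function names are all assumptions I picked for illustration.

```python
import random
import statistics


def toy_model(state, steps, r=3.9):
    """Stand-in 'weather model': the logistic map stepped forward in time."""
    for _ in range(steps):
        state = r * state * (1.0 - state)
    return state


def run_ensemble(best_guess, steps, members=25, nudge=1e-4, seed=0):
    """Run the same model many times from slightly nudged starting points."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    starts = [best_guess + rng.gauss(0.0, nudge) for _ in range(members)]
    return [toy_model(start, steps) for start in starts]


short_range = run_ensemble(best_guess=0.4, steps=5)
long_range = run_ensemble(best_guess=0.4, steps=50)

for label, runs in (("5 steps", short_range), ("50 steps", long_range)):
    print(label,
          "mean:", round(statistics.mean(runs), 3),
          "spread:", round(statistics.pstdev(runs), 3))
```

When the members stay bunched together (a small spread), the average is worth trusting. When they scatter, as they do at the longer range here, that scatter is itself the message: confidence is low.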

But most meteorologists get the five-day forecast right about 75-percent of the time. And that is pretty good, considering all of the hoops we have to jump through along the way.
